Originally published at awsadvent.com

Amazon Machine Images (AMIs) are the basis of every EC2 instance launched. They contain the root volume and thereby define which operating system and applications will run on the instance.

There are two types of AMIs:

- public AMIs are provided by vendors, communities or individuals. They are available on the AWS Marketplace and can be paid or free.

- private AMIs belong to a specific AWS account. They can be shared with other AWS accounts by granting launch permissions. Usually they are either a copy of a public AMI or created by the account owner.

There are several reasons to create your own AMI:

- predefine a template of the software that runs on your instances. This provides a major advantage for autoscaling environments. Since most, if not all, of the system configuration has already been done, there is no need to run extensive provisioning steps on boot. This drastically reduces the time from instance start to service ready.

- provide a base AMI for further usage by others. This can be used to ensure a specific baseline across your entire organization.

What is Packer

Packer is software from the HashiCorp universe, like Vagrant, Terraform or Consul. From a single source, you can create machine and container images for multiple platforms.

For this, Packer has the concepts of builders and provisioners.

Builders exist for major cloud providers (like Amazon EC2, Azure or Google), for container environments (like Docker) or classical virtualization environments (like QEMU, VirtualBox or VMware). They manage the build environment and perform the actual image creation.

Provisioners, on the other hand, are responsible for installing and configuring all components that will be part of the final image. They can be simple shell commands or fully featured configuration management systems like Puppet, Chef or Ansible.

How to use Packer for building AWS EC2 AMIs

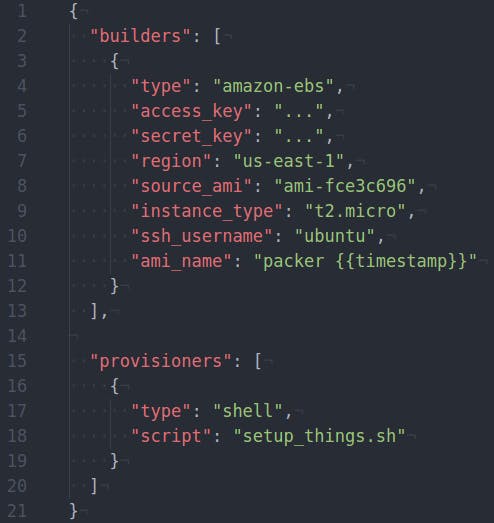

The heart of every Packer build is the template, a JSON file which defines the various steps of each Packer run. Let’s have a look at a very simple Packer template:

There is just one builder and one simple provisioner. On line 4, we specify the amazon-ebs builder which means Packer will create an EBS-backed AMI by:

- launching the source AMI

- running the provisioner

- stopping the instance

- creating a snapshot

- converting the snapshot into a new AMI

- terminating the instance

As this all occurs under your AWS account, you need to specify your access_key and secret_key. Lines 7-9 specify the region, source AMI and instance type that will be used during the build. ssh_username specifies which user Packer will use to SSH into the build instance. This is specific to the source AMI: Ubuntu-based AMIs use ubuntu, while Amazon Linux-based AMIs use ec2-user.

Packer will create temporary keypairs and security groups to connect the local system to the build instance to run the provisioner. If you are running Packer prior to 0.12.0, watch out for GitHub issue #4057.

The last line defines the name of the resulting AMI. We use the {{timestamp}} function of the Packer template engine which generates the current timestamp as part of the final AMI name.

The provisioner section defines one provisioner of the type shell. The local script “setup_things.sh” will be transferred to the build instance and executed. This is the easiest and most basic way to provision an instance.
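
As a rough sketch, a template matching this description could be written to disk as follows. This is a reconstruction, not the original file: the file name simple_template.json, the key placeholders, the instance type and the AMI name are assumptions, and the region and source AMI are borrowed from the more extensive example later in this post.

```shell
#!/bin/bash
# Write a minimal Packer template with one amazon-ebs builder and one
# shell provisioner. All concrete values are illustrative placeholders.
cat > simple_template.json <<'EOF'
{
  "builders": [
    {
      "type": "amazon-ebs",
      "access_key": "YOUR_ACCESS_KEY",
      "secret_key": "YOUR_SECRET_KEY",
      "region": "us-east-1",
      "source_ami": "ami-b520c0d8",
      "instance_type": "t2.micro",
      "ssh_username": "ubuntu",
      "ami_name": "packer-simple-demo {{timestamp}}"
    }
  ],
  "provisioners": [
    {
      "type": "shell",
      "script": "setup_things.sh"
    }
  ]
}
EOF
```

Running packer validate simple_template.json checks the template before packer build simple_template.json starts the actual build.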

A more extensive example

A real-world scenario usually needs more than just executing a simple shell script during provisioning. Let’s add some more advanced features to the simple template.

Optional sections

The first thing somebody could add is a description and a variables section to the top of our template, like:

    {
      "description": "Demo Packer template",
      "variables": {
        "aws_access_key": "",
        "aws_secret_key": "",
        "base_ami": "ami-b520c0d8",
        "base_docker_image": "ubuntu:16.04",
        "instance_type": "t2.medium",
        "vpc_id": "vpc-12345678",
        "subnet_id": "subnet-87654321"
      },
      [...]
    }

The first is just a simple, optional description of the template, while the second adds some useful functionality: it defines variables which can be used later in the template. Some of them have a default value, others default to an empty string and can be set when calling Packer. Running packer inspect shows this:

    $ packer inspect advanced_template.json
    Description:

    Demo Packer template

    Optional variables and their defaults:

      aws_access_key    =
      aws_secret_key    =
      base_ami          = ami-b520c0d8
      base_docker_image = ubuntu:16.04
      instance_type     = t2.medium
      subnet_id         = subnet-87654321
      vpc_id            = vpc-12345678

    [...]

Overriding can be done like this:

    $ packer build \
        -var 'aws_access_key=foo' \
        -var 'aws_secret_key=bar' \
        advanced_template.json
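
Values passed with -var end up in the shell history. As an alternative sketch, Packer also accepts a JSON file of variable values through the -var-file option. The file name variables.json and the values in it are placeholders, not part of the original example.

```shell
#!/bin/bash
# Keep variable overrides in a file instead of passing them one by one.
# File name and values are placeholders for illustration.
umask 077   # files created from here on are readable by the current user only
cat > variables.json <<'EOF'
{
  "aws_access_key": "foo",
  "aws_secret_key": "bar",
  "instance_type": "t2.small"
}
EOF
```

The build is then started with packer build -var-file=variables.json advanced_template.json. Variables that are not listed in the file keep the defaults from the template.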

Multiple builders

The next extension could be to define multiple builders:

    {
      [...]
      "builders": [
        {
          "type": "amazon-ebs",
          "access_key": "{{user `aws_access_key`}}",
          "secret_key": "{{user `aws_secret_key`}}",
          "region": "us-east-1",
          "source_ami": "{{user `base_ami`}}",
          "instance_type": "{{user `instance_type`}}",
          "vpc_id": "{{user `vpc_id`}}",
          "subnet_id": "{{user `subnet_id`}}",
          "associate_public_ip_address": true,
          "ssh_username": "ubuntu",
          "ami_name": "Packer-build DEMO {{timestamp}}",
          "ami_description": "Build at {{isotime \"Mon, 02 Jan 2006 15:04:05 MST\"}} based on {{user `base_ami`}}"
        },
        {
          "type": "docker",
          "image": "{{user `base_docker_image`}}",
          "pull": true,
          "commit": true,
          "run_command": ["-d", "-i", "--net=host", "-t", "{{.Image}}", "/bin/bash"]
        }
      ]
      [...]
    }

The amazon-ebs builder now uses some of the previously introduced variables. It is also more specific about the build environment on the AWS side: it defines a VPC and a subnet, and attaches a public IP address to the build instance. In addition, ami_description sets the description of the resulting AMI.

The second builder defines a build with docker. This is quite useful for testing the provisioning part of the template. Creating an EC2 instance and an AMI afterwards takes some time and resources while building in a local docker environment is faster.

The pull option ensures that the base Docker image is pulled if it isn’t already in the local repository, while the commit option causes the container to be committed to an image in the local repository after provisioning instead of being exported.

By default, Packer executes all builders that have been defined. This can be useful if you want to build the same image for a different cloud provider or in different AWS regions at the same time. In our example we have a special test builder and the actual AWS builder. The following command tells Packer to use only a specific builder:

$ sudo packer build -only=docker advanced_template.json

Provisioner

Provisioners are executed sequentially during the build phase. With the only option you can restrict a provisioner to run only for the listed builders.

    {
      "provisioners": [
        {
          "type": "shell",
          "script": "bootstrap.sh",
          "execute_command": "{{ .Vars }} sudo -E sh '{{ .Path }}'",
          "only": ["amazon-ebs"]
        },
        {
          "type": "shell",
          "script": "bootstrap.sh",
          "only": ["docker"]
        },
        {
          "type": "ansible-local",
          "playbook_file": "site.yml"
        },
        {
          "type": "shell",
          "script": "cleanup.sh"
        }
      ]
    }

This is useful if you need different provisioners or different options for a provisioner. In this example the first two provisioners call the same script to do some general bootstrap actions. One is for the amazon-ebs builder, where we call the script with sudo, and the other is for the docker builder, where we don’t need sudo because root is the default user inside a Docker container.

The script itself upgrades all installed packages and installs Ansible to prepare for the next provisioner:

    #!/bin/bash
    export DEBIAN_FRONTEND=noninteractive
    apt-get update
    apt-get -y upgrade
    apt-get -y install sudo ansible

Now a provisioner of the type ansible-local can be used. Packer will copy the defined Ansible Playbook from the local system into the instance and then execute it locally.
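
The playbook itself is not shown in this post. As a purely hypothetical stand-in, a minimal site.yml could look like the one written by the sketch below. The nginx package is an arbitrary example, and hosts: all assumes that the inventory Packer generates for ansible-local contains the local machine.

```shell
#!/bin/bash
# Write a hypothetical minimal playbook for the ansible-local provisioner.
# The package name is an arbitrary example, not taken from the original post.
cat > site.yml <<'EOF'
---
- hosts: all
  become: true
  tasks:
    - name: Install nginx as an example payload
      apt:
        name: nginx
        state: present
        update_cache: true
EOF
```

Because bootstrap.sh installs sudo, become should work in both the EC2 instance and the Docker container.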

The last one is another simple shell provisioner to do some cleanup:

    #!/bin/bash
    # Remove Ansible
    sudo bash -c "apt-get purge -y ansible"
    # Remove temporary stuff
    sudo bash -c "apt-get autoremove -y"
    sudo bash -c "apt-get clean"
    sudo bash -c "rm -rf /tmp/*"
    sudo bash -c "rm -rf /home/ubuntu/.ansible"
    sudo bash -c "rm -rf /root/.ssh/authorized_keys"
    sudo bash -c "rm -rf /usr/sbin/policy-rc.d/"
    sudo bash -c "rm -rf /etc/ssh/ssh_host_*"
    sudo bash -c "cat /dev/null > /var/log/wtmp"
    sudo bash -c "cat /dev/null > /var/log/syslog"

Post-Processors

Post-processors run right after the image is built, for example to tag the Docker image in the local Docker repository:

    {
      "post-processors": [
        [
          {
            "type": "docker-tag",
            "only": ["docker"],
            "repository": "demo/awsadvent",
            "tag": "{{timestamp}}"
          }
        ]
      ]
    }

Another use is to trigger the next step of a CI/CD pipeline.

Pitfalls

While building AMIs with Packer is quite easy in general, there are some pitfalls to be aware of.

The most common one is a difference between the build system and the instances that will later be launched from the AMI. It could be anything from something as simple as a different instance type to running in a different VPC. This means thinking about what can already be done at build time and what is specific to the environment where an EC2 instance is launched from the built AMI. Examples are an Apache worker thread configuration that depends on the number of available CPU cores, or a VPC-specific endpoint the application communicates with, such as an S3 VPC endpoint or the CloudWatch endpoint to which custom metrics are sent.

This can be addressed by running a script or any real configuration management system at first boot time.
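
A first-boot script for the Apache case might look roughly like the following sketch. The factor of 25 workers per core, the MaxRequestWorkers directive as the target setting and the output file worker.conf are assumptions for illustration. A real script would write to the Apache configuration and reload the service.

```shell
#!/bin/bash
# First-boot sketch: derive a worker limit from the CPU cores of the
# instance that is actually running, not from the build instance.
# The factor of 25 per core and the output path are assumptions.

workers_for_cores() {
  local cores="$1"
  echo $(( cores * 25 ))
}

# Detect the number of online CPU cores.
cores="$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN)"
workers="$(workers_for_cores "$cores")"

# Write a hypothetical config snippet (override the path via OUT_FILE).
out="${OUT_FILE:-./worker.conf}"
printf 'MaxRequestWorkers %s\n' "$workers" > "$out"
```

Such a script could, for example, be passed as EC2 user data so that it runs when the instance boots for the first time.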

Wrapping up

As we have seen, building an AMI with a pre-installed configuration is not that hard. Packer is an easy-to-use and powerful tool for that. We have discussed the basic building blocks of a Packer template and some of the more advanced options. Go ahead and check out the great Packer documentation, which explains this and much more in detail.

All code examples can be found at https://github.com/aruetten/aws-advent-2016-building-amis